What is RAG and Why Are LLMs Not Enough?

We highlight the limitations of LLMs and introduce RAG as a solution that enhances accuracy by accessing external knowledge sources.

In the rapidly evolving world of AI, Large Language Models (LLMs) like ChatGPT, Google Gemini, and Claude have taken center stage. Their ability to generate human-like text, answer complex questions, and assist in a variety of tasks is nothing short of remarkable. However, as powerful as these models are, they come with limitations. One of the most pressing issues is their inability to answer questions that require specialized or non-public information. This is where Retrieval-Augmented Generation (RAG) comes into play.

A Real-World Example: Why Did Buru Energy's Ungani 3 Well Fail in the Canning Basin, Western Australia?

Let’s dive into a practical example to illustrate this point. Suppose you ask Google Gemini, “Why did the Ungani 3 well fail?” Here’s a summary of the response:

Here is a screenshot of the exact response:

This response highlights a critical limitation of LLMs: they rely heavily on publicly accessible internet data. Training these models is enormously expensive (GPT-4, for example, was reportedly trained at a cost of $100 million), yet they still can't draw on information that isn't included in their training data.

The Financial and Practical Limits of LLM Training

Training an LLM is not just expensive; it's also a time-consuming process. Given the exorbitant costs and the rapid pace of information generation, there's little financial incentive to continuously update these models with new data. So, what happens when we need to answer questions that require knowledge beyond what an LLM was originally trained on? Is there a way to leverage these powerful models to work with our own specialized documents, such as mineral relinquishment reports, exploration reports, or well completion reports?

The answer is yes, and this is where RAG comes into play.

A Metaphor: The Year 10 Student and the Open-Book Exam

To understand how RAG works, let's consider a metaphor. Imagine a Year 10 student who goes into a science exam. This student has a general knowledge of various subjects and a good command of English, which is her native language. She’s supposed to answer the exam questions based on what she has studied beforehand. This is akin to how an LLM answers questions based on its training.

But what if this student walks into the wrong exam hall and finds herself taking a Petroleum Geology exam? She’s unlikely to know the answers and might try to guess, which would mostly lead to incorrect answers. In the LLM world, this is called "hallucination," where the model fabricates information when it doesn’t know the answer.

Now, what if we give this student a Petroleum Geology textbook and allow her to take an open-book exam? We might even give her extra time to find the right answers. The student would then read the questions, search for relevant information in the textbook, and use her existing knowledge to frame an answer. In the world of LLMs, this process is known as Retrieval-Augmented Generation (RAG).

What is RAG?

RAG combines the power of LLMs with access to external knowledge sources. Instead of relying solely on pre-existing knowledge, the model can search through additional documents to find the relevant context and generate a more accurate response.
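
The retrieve-then-generate idea can be sketched in a few lines of Python. This is only a toy illustration, not how RadixGPT or any particular product works: the three passages are short stand-ins (two paraphrase the Ungani 3 answer later in this post, one is unrelated filler), the word-count cosine similarity is a stand-in for a real search index, and `retrieve` and `build_prompt` are hypothetical helper names. The final prompt is what would be handed to an LLM.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the question (the 'open book' lookup)."""
    q = Counter(tokenize(question))
    ranked = sorted(
        documents,
        key=lambda d: cosine(q, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question: str, passages: list[str]) -> str:
    """Combine the retrieved passages and the question into one prompt for an LLM."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


# Toy corpus (assumption: illustrative snippets, not real report text).
documents = [
    "Ungani 3 intersected a bounding fault above the dolomite reservoir.",
    "Year 10 science covers cells, forces and chemical reactions.",
    "Core recovery in Ungani 3 was hindered by hard limestone and gummy shales.",
]

question = "Why did the Ungani 3 well fail?"
passages = retrieve(question, documents, k=2)
prompt = build_prompt(question, passages)
print(prompt)
```

In practice the word-count similarity would typically be replaced by a proper search or embedding index, but the shape stays the same: find the relevant text first, then let the model write the answer from it.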

The Power of RAG: A Closer Look at the Ungani 3 Well

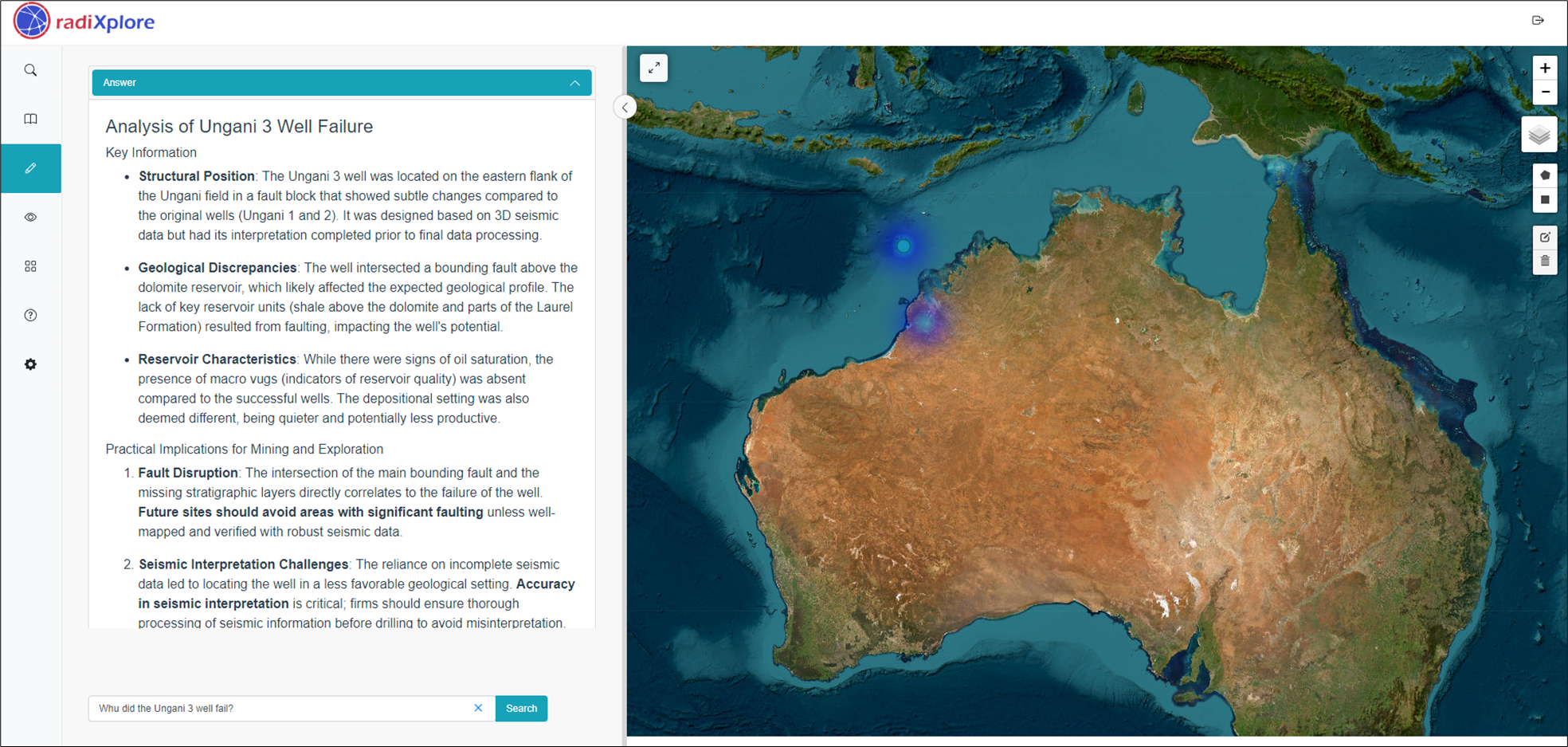

Let’s revisit our original question about the Ungani 3 well, but this time, we’ll allow the LLM to access Western Australia’s Onshore Petroleum Database (WAPIMS). WAPIMS contains more than 1 million pages of documents from more than 2,000 wells.

We will use RadixGPT, RadiXplore's RAG system, to test the question: “Why did the Ungani 3 well fail?”

Here’s the answer it generates:

Analysis of Ungani 3 Well Failure

Key Information

- Structural Position: The Ungani 3 well was located on the eastern flank of the Ungani field in a fault block that showed subtle changes compared to the original wells (Ungani 1 and 2). It was designed based on 3D seismic data but had its interpretation completed prior to final data processing.

- Geological Discrepancies: The well intersected a bounding fault above the dolomite reservoir, which likely affected the expected geological profile. The lack of key reservoir units (shale above the dolomite and parts of the Laurel Formation) resulted from faulting, impacting the well's potential.

- Reservoir Characteristics: While there were signs of oil saturation, the presence of macro vugs (indicators of reservoir quality) was absent compared to the successful wells. The depositional setting was also deemed different, being quieter and potentially less productive.

Practical Implications for Mining and Exploration

- Fault Disruption: The intersection of the main bounding fault and the missing stratigraphic layers directly correlates to the failure of the well. Future sites should avoid areas with significant faulting unless well-mapped and verified with robust seismic data.

- Seismic Interpretation Challenges: The reliance on incomplete seismic data led to locating the well in a less favorable geological setting. Accuracy in seismic interpretation is critical; firms should ensure thorough processing of seismic information before drilling to avoid misinterpretation.

- Core Recovery Issues: Difficulties in core recovery due to hard limestone and gummy shales hindered the evaluation of reservoir potential. Investing in better drilling technologies or adapting drilling methodology to deal with challenging formations could improve recovery rates in future ventures.

- Comparative Log Analysis: Ungani 3's petrological comparison to successful wells shows the importance of understanding variances in depositional environments. Future explorations should incorporate data from surrounding successful wells to evaluate potential reservoir quality.

Conclusion

The Ungani 3 well failed primarily due to its unfortunate geological setting influenced by faulting, incomplete stratigraphic understanding, and challenges in core recovery. For future drilling campaigns, greater emphasis should be placed on complete seismic interpretation, understanding local fault dynamics, and adapting drilling strategies to enhance core recovery and reservoir characterization.

This response is far more detailed and relevant because the LLM was able to draw on specific well reports that were not part of its training data. The system tackled the question in three steps: it first interpreted the question, then searched for text that could answer it, and finally used that text to generate a coherent response.

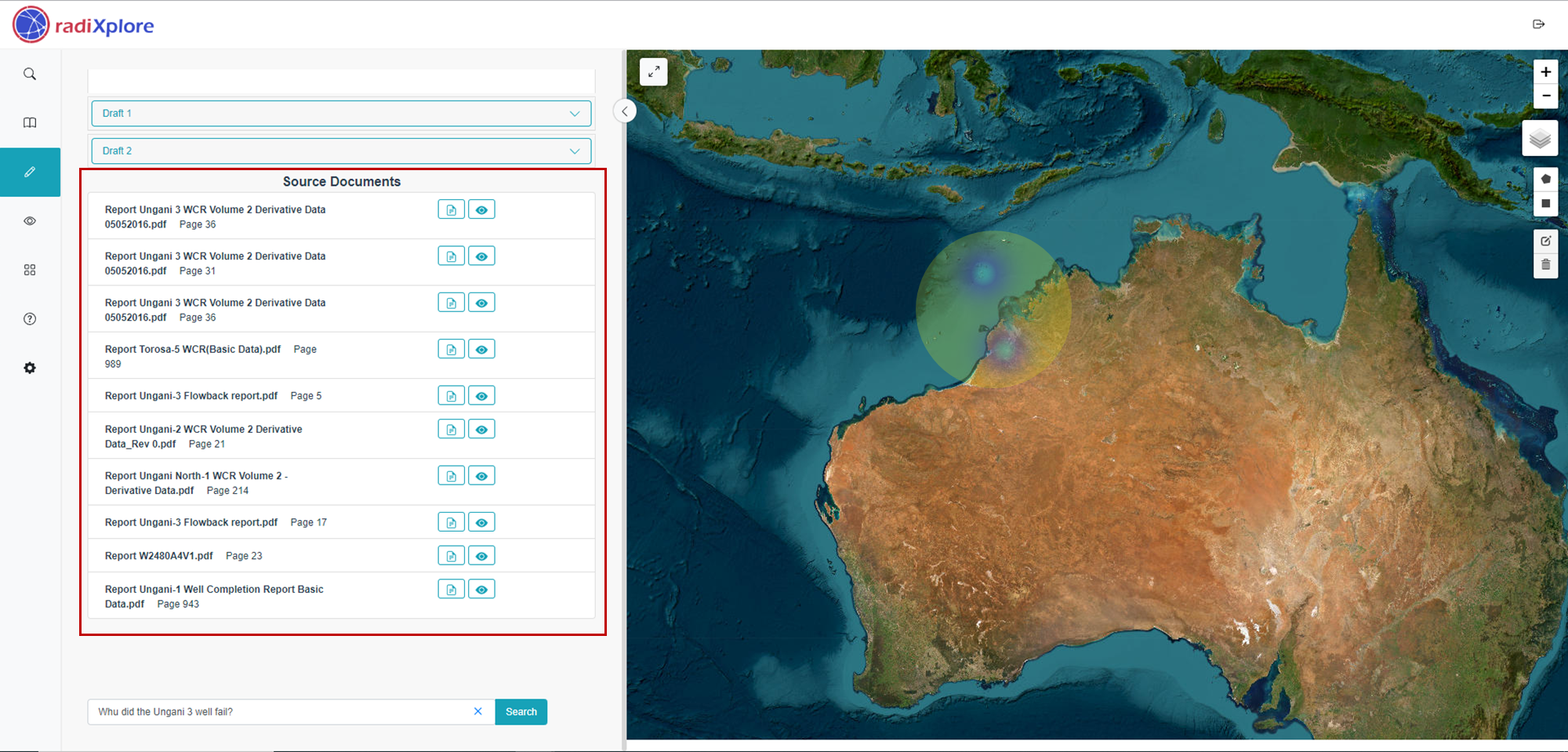

To audit the answer, you can ask the LLM to return the text it decided was relevant. In this example you can see it used reports from Ungani 3 and Ungani 2 to come up with the answer. It also plotted the location of the reports on the map.
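
One way to make this kind of audit possible is to keep each retrieved passage attached to the report it came from, so the sources can be listed next to the answer. The sketch below is a hypothetical illustration of that idea, not RadixGPT's implementation: the `Passage` class, the report labels, the passage text, and the simple shared-word score are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Passage:
    report: str  # hypothetical label for the source report
    text: str    # invented placeholder text, not real report content


def words(text: str) -> set[str]:
    """Lowercase words with trailing punctuation stripped."""
    return {w.strip(".,?!") for w in text.lower().split()}


def overlap(question: str, text: str) -> int:
    """Count distinct words shared by the question and a passage."""
    return len(words(question) & words(text))


def retrieve_with_sources(question: str, corpus: list[Passage], k: int = 2) -> list[Passage]:
    """Return up to k passages that share at least one word with the question."""
    scored = [(overlap(question, p.text), p) for p in corpus]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [p for score, p in scored[:k] if score > 0]


corpus = [
    Passage("Ungani 3 report", "The Ungani 3 well intersected a bounding fault above the dolomite reservoir."),
    Passage("Ungani 2 report", "Macro vugs were observed in the Ungani 2 well dolomite."),
    Passage("Unrelated report", "Gravity survey over a gold prospect."),
]

question = "Why did the Ungani 3 well fail?"
used = retrieve_with_sources(question, corpus, k=2)

# The audit trail: which reports the answer would be based on.
sources = [p.report for p in used]
print(sources)
```

Because every passage carries its report label, the same list that feeds the model can be shown to the reader, who can then check the answer against the original documents.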

The Implications of RAG: Answering the Unanswered Questions

While RAG shows us a way to overcome some of the limitations of LLMs, it also raises several new questions:

- Why can't we fine-tune LLMs with new knowledge, just like we do with image datasets?

- How can a student without prior knowledge of geology locate relevant text during an open-book exam?

- Can Retrieval-Augmented Generation (RAG) models match the versatility of LLMs in answering questions?

- What drives companies to continually train new LLM models, rather than leveraging existing ones?

Stay tuned for upcoming blog posts, where we'll delve into these questions and provide insightful answers.